All the files and code for this project are available in the GitHub repo: https://github.com/MrMoDDoM/RT-EVM-JS

Have you ever wondered if it’s possible to see the invisible? To amplify tiny movements that are imperceptible to the human eye? That’s exactly what the Eulerian Video Magnification technique does, and I’ve implemented it in pure JavaScript to run directly in your browser.

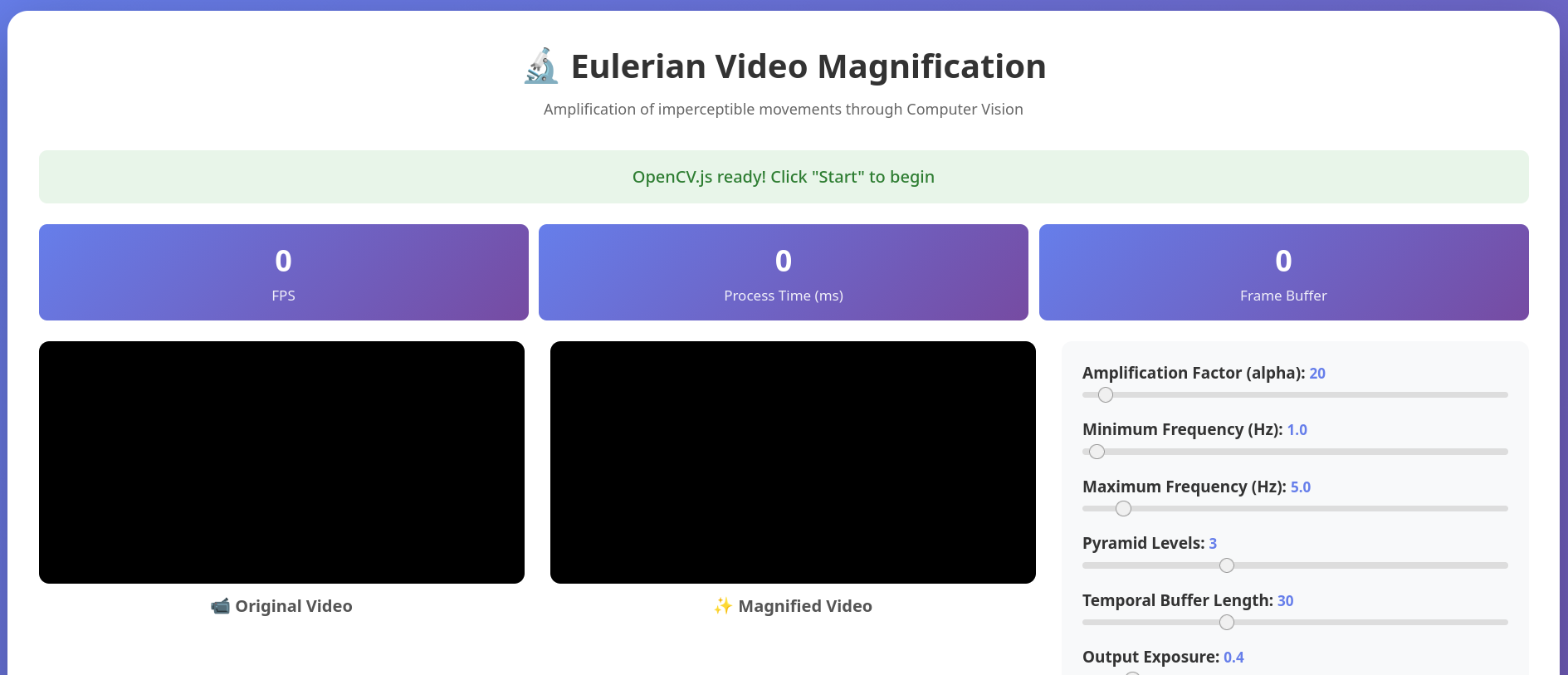

The RT-EVM-JS interface with real-time parameter controls

🔬 What is Eulerian Video Magnification?

Eulerian Video Magnification is a fascinating technique developed by researchers at MIT CSAIL that reveals temporal variations in videos that are invisible to the naked eye. Unlike traditional motion tracking methods (Lagrangian approach) that follow individual pixels through frames, the Eulerian approach analyzes temporal variations at fixed spatial positions.

The algorithm can amplify:

- Breathing patterns through chest movements

- Micro-vibrations in structures like buildings or bridges

- Subtle body movements and tremors

- Vibrations in objects caused by sound waves

This technique was introduced in the groundbreaking paper “Eulerian Video Magnification for Revealing Subtle Changes in the World” by Wu, Rubinstein, Shih, Guttag, Durand, and Freeman in 2012.

✨ Features

RT-EVM-JS brings this powerful algorithm to the web with a comprehensive set of features:

- Real-time webcam processing with front/back camera switching

- Video file upload support with looping playback for testing

- Parameters adjustable in real time:

  - Amplification factor (alpha)

  - Frequency range (Hz) for temporal filtering

  - Pyramid levels for spatial decomposition

  - Temporal buffer size

  - Exposure control

  - Blur and noise attenuation

- Performance monitoring: FPS counter, processing time, buffer status

- Preset configurations for common use cases (heart rate, breathing, general motion)

- Video recording of magnified output

- Download capability for processed videos in WebM format

- Responsive design for desktop and mobile devices

- Privacy-focused: All processing happens client-side, no data sent to servers

Example of breathing pattern amplification. DISCLAIMER: The gif’s stuttering is caused by my bad recording software

🎯 How It Works

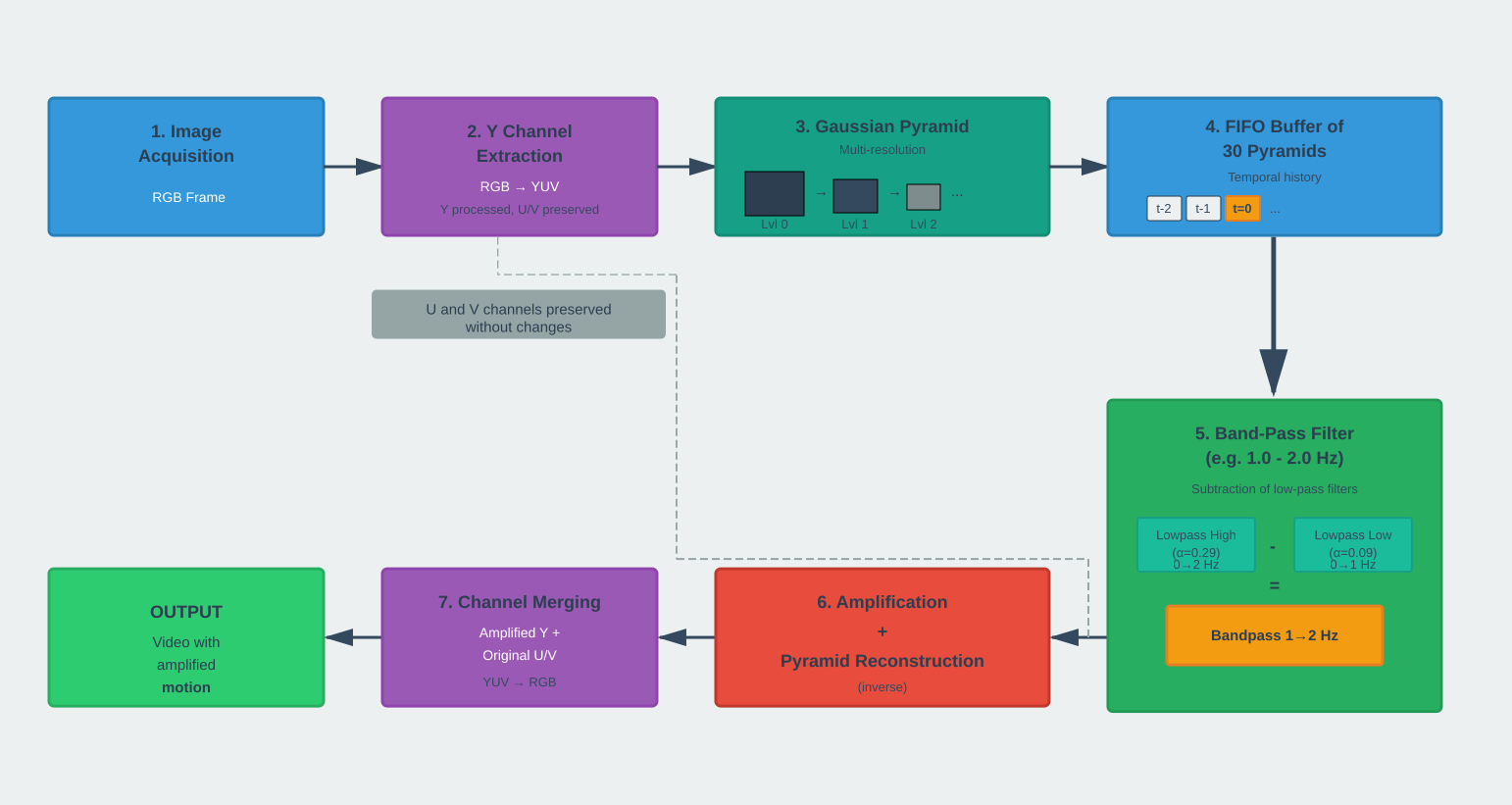

The Eulerian Video Magnification algorithm works through four main steps:

1. Spatial Decomposition

Each video frame is decomposed into different spatial frequency bands using a Gaussian pyramid. This creates multiple levels of the image at different scales, allowing the algorithm to isolate specific spatial frequencies.
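
To make this step concrete, here is a minimal sketch of a Gaussian pyramid in plain JavaScript. It is not the project's code, which relies on OpenCV.js: the function names `pyrDown` and `buildGaussianPyramid`, the flat grayscale `Float32Array` layout, the 5-tap binomial kernel, and the border replication are all assumptions made for illustration.

```javascript
// 5-tap binomial kernel, a common approximation of a Gaussian (assumption).
const KERNEL = [1 / 16, 4 / 16, 6 / 16, 4 / 16, 1 / 16];

// Clamp an index to [0, max] so border pixels are replicated.
const clamp = (i, max) => Math.min(Math.max(i, 0), max);

// Blur a grayscale image (flat, row-major array) and keep every other pixel.
function pyrDown({ data, width, height }) {
  // Horizontal blur pass at full resolution.
  const tmp = new Float32Array(width * height);
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      let sum = 0;
      for (let k = -2; k <= 2; k++) {
        sum += KERNEL[k + 2] * data[y * width + clamp(x + k, width - 1)];
      }
      tmp[y * width + x] = sum;
    }
  }
  // Vertical blur pass combined with 2x subsampling in both directions.
  const w = Math.ceil(width / 2);
  const h = Math.ceil(height / 2);
  const out = new Float32Array(w * h);
  for (let y = 0; y < h; y++) {
    for (let x = 0; x < w; x++) {
      let sum = 0;
      for (let k = -2; k <= 2; k++) {
        sum += KERNEL[k + 2] * tmp[clamp(2 * y + k, height - 1) * width + 2 * x];
      }
      out[y * w + x] = sum;
    }
  }
  return { data: out, width: w, height: h };
}

// Level 0 is the input image; each further level is half the size of the previous one.
function buildGaussianPyramid(image, levels) {
  const pyramid = [image];
  for (let i = 1; i < levels; i++) {
    pyramid.push(pyrDown(pyramid[i - 1]));
  }
  return pyramid;
}

// Usage: an 8x8 image yields levels of 8x8, 4x4 and 2x2 pixels.
const demoPyramid = buildGaussianPyramid(
  { data: new Float32Array(64).fill(10), width: 8, height: 8 },
  3
);
```

Coarser levels keep only the low spatial frequencies, which is why small, noisy pixel-level changes get averaged out before the temporal filter sees them.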

2. Temporal Filtering

For each spatial band, the algorithm applies bandpass filters to isolate specific frequency ranges over time. This is where we select what kind of motion we want to amplify (e.g., 0.2-0.5 Hz for breathing, 1.0-5.0 Hz for vibrations).
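
As a rough illustration, the sketch below applies an ideal bandpass filter to the time series of a single pixel: it takes a discrete Fourier transform, zeroes every bin outside the chosen band, and transforms back. The function name `idealBandpass` is hypothetical, and the naive O(n²) DFT is used only to keep the example self-contained; a real-time implementation would more likely use an FFT.

```javascript
// Ideal bandpass on one real-valued time series sampled at `fps` frames per second.
// Keeps only the frequency components between fLow and fHigh (in Hz).
function idealBandpass(signal, fps, fLow, fHigh) {
  const n = signal.length;
  const re = new Float64Array(n);
  const im = new Float64Array(n);

  // Forward DFT: X[k] = sum over t of x[t] * exp(-2*pi*i*k*t/n).
  for (let k = 0; k < n; k++) {
    for (let t = 0; t < n; t++) {
      const angle = (-2 * Math.PI * k * t) / n;
      re[k] += signal[t] * Math.cos(angle);
      im[k] += signal[t] * Math.sin(angle);
    }
  }

  // Zero every bin outside the passband. Bin k sits at k * fps / n Hz;
  // bins above n / 2 mirror the negative frequencies, hence min(k, n - k).
  for (let k = 0; k < n; k++) {
    const freq = (Math.min(k, n - k) * fps) / n;
    if (freq < fLow || freq > fHigh) {
      re[k] = 0;
      im[k] = 0;
    }
  }

  // Inverse DFT, keeping the real part only (the input was real).
  const out = new Float64Array(n);
  for (let t = 0; t < n; t++) {
    let sum = 0;
    for (let k = 0; k < n; k++) {
      const angle = (2 * Math.PI * k * t) / n;
      sum += re[k] * Math.cos(angle) - im[k] * Math.sin(angle);
    }
    out[t] = sum / n;
  }
  return out;
}
```

Calling `idealBandpass(series, 30, 0.5, 3.0)` on a 30 fps pixel series would remove the constant brightness level and anything faster than 3 Hz, leaving only the variation inside the band.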

3. Signal Amplification

The filtered temporal signals are multiplied by an amplification factor (alpha). This is the key step that makes invisible motions visible.

4. Reconstruction

The amplified signals are combined with the original frame to produce the final magnified video. The result reveals motions that were previously imperceptible.
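
Steps 3 and 4 boil down to one line of arithmetic per pixel: output = original + alpha × filtered. The sketch below shows that for a single channel, assuming the filtered signal has already been brought back to the frame's resolution; the name `magnifyFrame` is hypothetical and not taken from the project.

```javascript
// Amplify the band-passed signal and add it back onto the original frame.
// `original` holds 8-bit pixel values, `filtered` the temporal filter output
// for the same pixels, and `alpha` is the amplification factor.
function magnifyFrame(original, filtered, alpha) {
  // Uint8ClampedArray rounds and clamps to 0..255, which is what canvas ImageData expects.
  const out = new Uint8ClampedArray(original.length);
  for (let i = 0; i < original.length; i++) {
    out[i] = original[i] + alpha * filtered[i];
  }
  return out;
}

// Usage: a variation of 0.5 grey levels becomes 5 levels with alpha = 10.
const magnified = magnifyFrame([100, 250, 5], [0.5, 1, -1], 10);
// magnified is [105, 255, 0]: the last two values were clamped to the valid range.
```

The clamping is also why very large alpha values tend to produce blown-out artifacts: once a pixel saturates, the extra amplification is lost.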

Simplified pipeline of the Eulerian Video Magnification algorithm

🚀 Technical Implementation

The implementation uses OpenCV.js, the JavaScript port of the popular OpenCV computer vision library. This allows complex image processing operations to run efficiently in the browser through WebAssembly.

Key technical aspects:

- WebRTC for real-time webcam access

- MediaRecorder API for video recording

- Canvas API for rendering

- Temporal buffering to maintain frame history for filtering

- Gaussian pyramid construction for multi-scale analysis

- Ideal bandpass filter in the frequency domain

The entire processing pipeline runs client-side, ensuring user privacy and eliminating the need for server infrastructure.
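
To illustrate the temporal buffering idea, here is a minimal sketch of a fixed-size frame history. The class name `FrameBuffer` and its methods are hypothetical and only show the general pattern: keep the most recent N frames and read out the time series of one pixel so it can be filtered.

```javascript
// Keeps the most recent `capacity` frames; older frames are discarded.
class FrameBuffer {
  constructor(capacity) {
    this.capacity = capacity;
    this.frames = [];
  }

  // Add a new frame (any indexable array of pixel values).
  push(frame) {
    this.frames.push(frame);
    if (this.frames.length > this.capacity) {
      this.frames.shift(); // drop the oldest frame
    }
  }

  // True once enough history has accumulated to run the temporal filter.
  get isFull() {
    return this.frames.length === this.capacity;
  }

  // Values of one pixel across the buffered frames, oldest first.
  pixelSeries(index) {
    return this.frames.map((frame) => frame[index]);
  }
}
```

A typical loop would call `push` for every captured frame, wait until `isFull` is true, and then filter each `pixelSeries` before amplifying it.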

🎛️ Using RT-EVM-JS

Getting started is straightforward:

- Clone the repository:

```bash
git clone https://github.com/MrMoDDoM/RT-EVM-JS.git
cd RT-EVM-JS
```

- Serve the HTML file using any HTTP server:

```bash
# Python 3
python -m http.server 8000

# Node.js
npx http-server -p 8000

# PHP
php -S localhost:8000
```

- Open in browser: Navigate to http://localhost:8000/rt-evm-js.html

- Start processing: Allow webcam access, click “Avvia Elaborazione” (Start Processing), and adjust parameters in real time

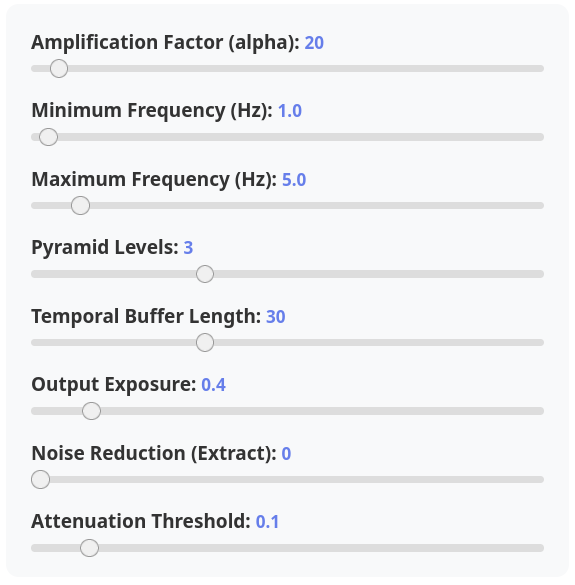

💡 Tips for Best Results

- Good lighting: Ensure adequate and stable lighting for better signal detection

- Camera stability: Use a tripod if possible to minimize camera shake

- Appropriate distance: Position yourself correctly for the feature you want to detect

- Frequency ranges: Adjust based on the motion type:

  - Breathing: 0.2 - 0.5 Hz (12-30 breaths/min)

  - Heart rate: 0.8 - 1.5 Hz (48-90 bpm)

  - Vibrations: 1.0 - 5.0 Hz

  - General motion: 0.5 - 3.0 Hz

- Buffer length: Longer buffers (30+ frames) provide more accurate filtering but increase latency

- Amplification factor: Start with lower values (5-10) and increase gradually
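
The buffer length tip can be made more tangible with a bit of arithmetic. If the temporal filter is a Fourier transform taken over the buffer (an assumption on my part, based on the frequency-domain ideal bandpass filter listed earlier), the frequency bins are spaced `fps / frames` Hz apart, and a band narrower than that spacing may contain no bin at all. The helper names below are hypothetical, and the 30 fps camera rate is assumed for the example.

```javascript
// Spacing in Hz between neighbouring frequency bins for a given buffer.
const binSpacingHz = (fps, frames) => fps / frames;

// Indices of the non-negative frequency bins that fall inside [fLow, fHigh].
function binsInBand(fps, frames, fLow, fHigh) {
  const bins = [];
  for (let k = 0; k <= Math.floor(frames / 2); k++) {
    const freq = (k * fps) / frames;
    if (freq >= fLow && freq <= fHigh) {
      bins.push(k);
    }
  }
  return bins;
}

// Assumed 30 fps camera, breathing band 0.2 - 0.5 Hz.
const shortBuffer = binsInBand(30, 30, 0.2, 0.5);  // [] : bins sit at 0, 1, 2, ... Hz
const longBuffer = binsInBand(30, 150, 0.2, 0.5);  // [1, 2] : bins at 0.2 Hz and 0.4 Hz
```

Under these assumptions, a 30-frame buffer at 30 fps has 1 Hz spacing and cannot isolate breathing at all, while a 150-frame buffer can, at the cost of five seconds of history. How the actual implementation behaves depends on how its filter is built, so treat this as a rule of thumb when picking a buffer size.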

The parameter panel allows real-time adjustments

🔮 Future Improvements

While the current implementation focuses on motion magnification, there are several exciting directions for future development:

- Color amplification mode for detecting subtle color changes (e.g., heart rate via skin color variations)

- Automatic parameter tuning based on input video analysis

- Improved UI/UX with better visualization of frequency spectra

- ROI (Region of Interest) selection for focused frequency analysis

- Export functionality for parameter presets

- Multi-threaded processing using Web Workers for better performance

📚 Technical Background

This project is based on the seminal work:

Wu, H.-Y., Rubinstein, M., Shih, E., Guttag, J., Durand, F., & Freeman, W. T. (2012). Eulerian Video Magnification for Revealing Subtle Changes in the World. ACM Transactions on Graphics (Proceedings SIGGRAPH 2012), 31(4).

⚠️ Limitations and Disclaimers

This is an educational and research implementation. For medical or critical applications, please use validated professional equipment.

Current limitations:

- Motion magnification only: Color amplification features (e.g., for heart rate detection via skin color) are not yet implemented

- Browser performance: Processing is CPU-intensive and may struggle on lower-end devices

- Lighting conditions: Requires good, stable lighting for optimal results

- Large motions: Works best with subtle, periodic movements rather than large or rapid motions

🔧 Browser Compatibility

RT-EVM-JS requires a modern web browser with:

- WebRTC support for webcam access

- WebAssembly support for OpenCV.js

- Canvas API

- MediaRecorder API (for recording functionality)
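
If you want to check these requirements programmatically, a feature-detection sketch along the following lines should work. The function name `checkSupport` is hypothetical and not part of RT-EVM-JS; it takes the global object as a parameter so it can also be exercised outside a browser.

```javascript
// Report which of the required browser features are present on `env`
// (pass `window` in a browser).
function checkSupport(env) {
  return {
    // Webcam access goes through navigator.mediaDevices.getUserMedia.
    webcam: typeof env.navigator?.mediaDevices?.getUserMedia === 'function',
    // OpenCV.js needs WebAssembly to run efficiently.
    webAssembly: typeof env.WebAssembly === 'object' && env.WebAssembly !== null,
    // Rendering uses the Canvas API.
    canvas: typeof env.HTMLCanvasElement === 'function',
    // Only needed for the recording feature.
    mediaRecorder: typeof env.MediaRecorder === 'function',
  };
}
```

In a page you would call `checkSupport(window)` and, for example, hide the record button when `mediaRecorder` is false. Note that `getUserMedia` is generally only exposed in secure contexts (HTTPS or `localhost`).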

Tested and working on:

- Chrome/Chromium (recommended)

- Firefox

- Safari

- Edge

📄 License

This project is licensed under the MIT License, making it free to use, modify, and distribute.

🎓 Learning Resources

If you’re interested in learning more about video magnification and signal processing:

- MIT CSAIL EVM Project Page

- OpenCV.js Tutorials

- Digital Signal Processing Basics

- Computer Vision: Algorithms and Applications

This project demonstrates the power of modern web technologies in bringing advanced computer vision algorithms to everyone’s browser. No installation, no complex setup - just pure JavaScript magic revealing the invisible world around us.

Feel free to experiment, contribute, or use this as a foundation for your own projects. If you find it useful or create something interesting with it, I’d love to hear about it! 🚀
